FogBugz started out as an issue tracking system and has since evolved into product management software. Some highlights:

- A Wiki application handles specs and communication between team members. The Wiki is extremely well done, with a nice, friendly interface and some cool editing and comparison features.

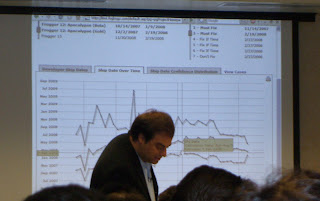

- A new feature called Evidence Based Scheduling (EBS) predicts release dates based on developers' estimates. Each developer estimates how long a task will take, and the actual time spent is recorded as well.

Over time, the estimate for each task is divided by the actual time it took. This produces a measurement called "velocity".

The closer your velocity is to 1, the better you are at estimating. It says nothing about how good or bad a developer you are, only about how well you estimate. The velocities of everyone on the team are then fed into a Monte Carlo simulation that attempts to predict when your software will be ready to release (see image).
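To make the velocity and Monte Carlo steps above concrete, here is a minimal sketch of the general idea. It is not FogBugz's actual implementation, which this post doesn't describe. It assumes that velocity is estimate divided by actual time (as described above), that each developer works through their own open tasks one after another, that developers work in parallel, and that the release is ready when the last developer finishes. The function names (`velocity`, `simulate_remaining_work`, `percentile`) and the sample data are hypothetical.

```python
import math
import random
from typing import Dict, List, Sequence


def velocity(estimate_hours: float, actual_hours: float) -> float:
    """Velocity of one finished task: estimate divided by actual time.
    1.0 is a perfect estimate; below 1.0 means the task took longer than estimated."""
    if actual_hours <= 0:
        raise ValueError("actual_hours must be positive")
    return estimate_hours / actual_hours


def simulate_remaining_work(
    remaining: Dict[str, List[float]],  # developer -> estimates (hours) of open tasks
    history: Dict[str, List[float]],    # developer -> velocities of past tasks
    rounds: int = 1000,
    seed: int = 0,
) -> List[float]:
    """Monte Carlo sketch: in each round, divide every open estimate by a velocity
    drawn at random from that developer's own history, sum per developer, and
    record when the last developer finishes. Returns the outcomes sorted."""
    rng = random.Random(seed)  # fixed seed so runs are reproducible
    outcomes = []
    for _ in range(rounds):
        finish_times = [
            sum(est / rng.choice(history[dev]) for est in estimates)
            for dev, estimates in remaining.items()
        ]
        outcomes.append(max(finish_times))  # assumption: developers work in parallel
    return sorted(outcomes)


def percentile(sorted_values: Sequence[float], p: float) -> float:
    """Nearest-rank percentile of an already-sorted sequence (0 < p <= 100)."""
    index = max(0, math.ceil(p / 100 * len(sorted_values)) - 1)
    return sorted_values[index]


# Hypothetical example data.
history = {
    "alice": [velocity(4, 5), velocity(8, 8), velocity(2, 4)],  # 0.8, 1.0, 0.5
    "bob": [velocity(6, 4), velocity(3, 3)],                    # 1.5, 1.0
}
remaining = {"alice": [10, 6], "bob": [12]}

outcomes = simulate_remaining_work(remaining, history)
for p in (50, 95):
    print(f"{p}% chance of finishing within {percentile(outcomes, p):.1f} hours")
```

Instead of a single release date, the result is a distribution, so you can quote a date along with a confidence level. Changing the inputs (for example, dropping tasks from `remaining`) and rerunning shows how the predicted date moves.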

You can then play with the parameters to arrive at a more suitable release date.

- Everything you can do through the web application is also exposed through a REST API.

- The search engine is smart and versatile, letting you report on or filter by any criterion or field. It also integrates into the browser as a search provider (I saw it working in Firefox; I assume the same is available for IE7).

- EVERYTHING can be subscribed to through RSS, even the filters you create on issues.

- Full integration with source control systems means that every fixed bug can show the filenames involved, and any file can show all the issues connected to it.

You can try FogBugz here.

As for meeting Joel, I found him every ounce as entertaining and knowledgeable as his blog persona.

He stuck around for an hour after the presentation to answer various questions.

My question was: in this post, he claimed that every system designed to measure developers can and will be "gamed" in the end, because developers are ingenious and resist being measured. How does that square with EBS?

His reply was that they've made every possible effort to ensure FogBugz does not produce reports on individuals, that EBS can only attest to a developer's estimation skills, and that ultimately, what counts is what managers do with that data.

If I were to paraphrase it, à la the NRA: data doesn't judge people, people judge people :).

We also heard a funny anecdote from his Microsoft development days about the MS Project 1.0 team. Their managers forced them to use Project to manage their own project (eating their own dog food). The first Gantt chart they produced predicted that Project would come out in 2018. They were henceforth excused from using Project on Project... :)

Fanboy confession: I originally intended to ask Joel to autograph my copy of "Smart and Gets Things Done", but kinda chickened out when I saw all the people around. Next time...

2 comments:

It's true that only "people judge people", but accurate data helps people judge better.

Sadly, people are not as objective as computers. They can take a meaningless statistic (say, how many bugs were assigned to you last quarter) and conclude from it that you're a bad developer, when in fact it means users are using your code more than that of other developers.

In my experience, when your manager reviews your performance, he has already made up his mind from the get-go. All he needs then are some statistics to support his theory. I refer you to my Dark Data post, where I discuss how people use and discard data.

Bottom line: don't trust people with statistics.
